New Research Confirms: Google Rankings Don't Guarantee AI Search Visibility

A University of Toronto study finds that AI search engines like ChatGPT, Claude, and Perplexity draw on very different sources than Google does. Here's what the GEO research means for your brand visibility strategy.

The way your customers find information is changing faster than most marketing teams realize. Instead of scanning ten blue links, users now ask ChatGPT, Claude, or Perplexity a question and receive a synthesized, conversational answer—often without clicking a single link.

For years, marketers operated on an assumption: if you rank well on Google, you'll be visible everywhere that matters. New academic research from the University of Toronto proves this assumption is dangerously wrong.

The study, titled "Navigating the Shift: A Comparative Analysis of Web Search and Generative AI Response Generation", provides the first large-scale empirical evidence of what many of us have suspected: AI search engines and traditional search engines operate on fundamentally different logics. The sources they consult, the content they favor, and the brands they surface are remarkably different.

This isn't speculation anymore. It's peer-reviewed science. And it has profound implications for every brand competing for visibility in 2026 and beyond.

What the GEO Whitepaper Studied

Researchers from the University of Toronto conducted a systematic comparison of leading generative AI services—GPT-4, Claude, Perplexity, and Gemini—against Google Search. Their analysis spanned over 1,000 ranking-style queries across consumer topics like smartphones, electric cars, airlines, laptops, and credit cards.

The methodology was rigorous. For each query, researchers extracted all cited URLs from AI responses, normalized them to registrable domains, and computed the overlap with Google's top 10 search results. They analyzed:

- Domain overlap: How much do AI sources overlap with Google's top results?

- Source typology: What types of sources do AI engines prefer (brand, earned media, or social)?

- Content freshness: How recent are the sources cited by each system?

- Pre-training bias: How does an LLM's training data influence which brands get surfaced?
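
The overlap step described above (extract cited URLs, normalize them to domains, compare with Google's results) can be sketched roughly as follows. This is a minimal illustration, not the researchers' code: the function names, the example URLs, and the choice to measure overlap as the share of AI-cited domains that also appear in Google's results are all assumptions. The domain normalization is deliberately naive; a real pipeline would consult the Public Suffix List to handle suffixes such as .co.uk.

```python
from urllib.parse import urlparse


def naive_registrable_domain(url: str) -> str:
    """Approximate the registrable domain as the last two host labels.

    Simplification (assumption): this is wrong for multi-part suffixes
    such as .co.uk; a production pipeline would use the Public Suffix List.
    """
    host = (urlparse(url).hostname or "").lower()
    labels = host.split(".")
    return ".".join(labels[-2:]) if len(labels) >= 2 else host


def domain_overlap(ai_urls, google_urls) -> float:
    """Share of distinct AI-cited domains that also appear in Google's results.

    Assumption: the study's exact overlap formula may differ from this one.
    """
    ai = {naive_registrable_domain(u) for u in ai_urls}
    google = {naive_registrable_domain(u) for u in google_urls}
    ai.discard("")
    google.discard("")
    if not ai:
        return 0.0
    return len(ai & google) / len(ai)


# Hypothetical URLs for a single query.
ai_urls = [
    "https://www.example-reviews.com/best-phones",
    "https://blog.example-news.org/ev-roundup",
    "https://shop.example-brand.com/laptops",
]
google_urls = [
    "https://example-brand.com/",
    "https://www.example-forum.net/thread/1",
]

print(f"Domain overlap: {domain_overlap(ai_urls, google_urls):.1%}")
```

In this made-up example only one of the three AI-cited domains also shows up in the Google list, so the overlap is one third.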

The findings paint a clear picture: if you're optimizing only for Google, you're likely invisible to AI.

The full whitepaper is cited at the end of this article.

Key Findings from the Research

Finding 1: Extremely Low Overlap Between Google and AI Sources

The most striking finding is the near-complete divergence between Google search results and AI-generated sources.

According to the study, GPT-4 shows only 4% domain overlap with Google's top 10 results. Gemini shows 11.1%, Claude shows 12.6%, and Perplexity—the highest—still only reaches 15.2%.

To put this in perspective: read loosely as odds, a strategy built entirely around ranking in Google's top 10 gives you roughly a 1-in-7 chance of appearing in AI-generated answers at best (Perplexity, 15.2%). At worst, it's 1-in-25 (GPT-4, 4%).
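
The odds framing is simple arithmetic on the overlap percentages quoted above: an overlap of p percent corresponds to about 1 in 100/p. The short sketch below does that conversion for each engine. Treating domain overlap as a chance of being cited is a loose reading rather than a claim from the study, and the helper name is made up for illustration.

```python
# Overlap percentages as quoted in this article.
overlap_pct = {"GPT-4": 4.0, "Gemini": 11.1, "Claude": 12.6, "Perplexity": 15.2}


def one_in_n(pct: float) -> float:
    """Convert a percentage into the N of a '1 in N' statement."""
    return 100.0 / pct


for engine, pct in overlap_pct.items():
    print(f"{engine}: {pct}% overlap, about 1 in {one_in_n(pct):.1f}")
```

Perplexity's 15.2% works out to roughly 1 in 6.6, and GPT-4's 4% to exactly 1 in 25.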

What this means for brands:

Ranking #1 on Google for your target keywords does not mean ChatGPT, Claude, or Perplexity will mention you when users ask related questions. These are entirely separate visibility games requiring distinct optimization strategies.

The research confirms what marketers have begun to observe anecdotally: brands dominating traditional search are often completely absent from AI responses, while smaller competitors with different content strategies get cited repeatedly.

Finding 2: AI Engines Favor Earned Media Over Social Content

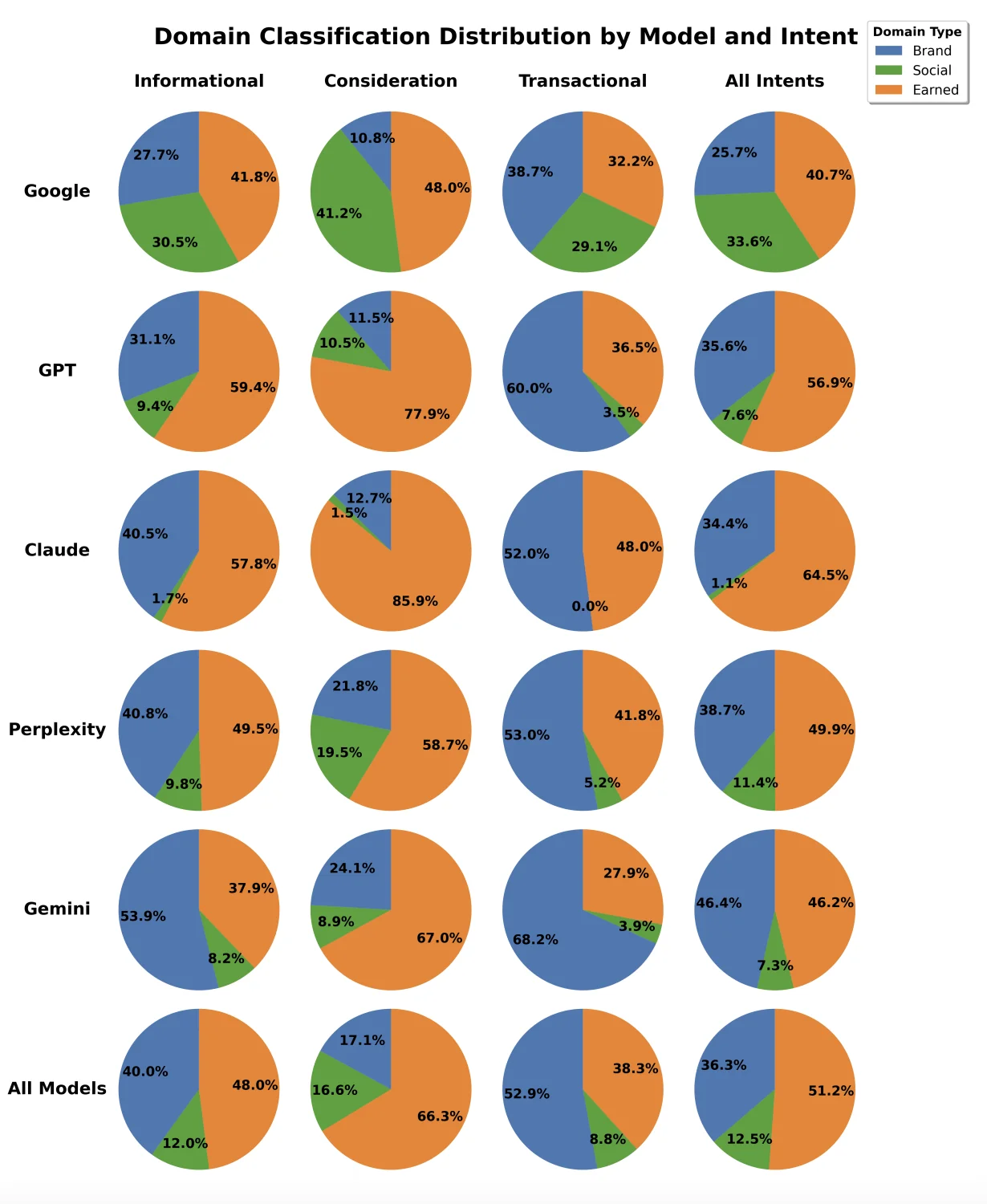

The study classified sources into three categories: brand-owned sites, earned media (independent publications and reviews), and social media (user-generated content like Reddit, forums, YouTube comments).

The findings reveal a dramatic preference difference:

- Google maintains relatively balanced sourcing: 41% earned media, 34% social, 26% brand (rounded figures, which is why they sum to 101%).

- Claude concentrates heavily on earned media: 65% earned, only 1% social.

- GPT-4 follows a similar pattern: 57% earned, 8% social.

- Perplexity is more balanced but still favors earned: 50% earned, 39% brand.
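
A source mix like the ones above can be computed by labeling each cited domain and counting the labels. The sketch below assumes a hand-built lookup table with made-up domains; how the study actually classified sources is not described in this article, so the table and the function name are purely illustrative.

```python
from collections import Counter

# Hypothetical lookup table mapping domains to the three source types.
SOURCE_TYPE = {
    "example-brand.com": "brand",
    "example-reviews.com": "earned",
    "example-news.org": "earned",
    "example-forum.net": "social",
}


def source_mix(domains):
    """Return the percentage share of each source type among cited domains."""
    counts = Counter(SOURCE_TYPE.get(d, "unknown") for d in domains)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {kind: 100.0 * n / total for kind, n in counts.items()}


# Hypothetical citations collected from one engine.
cited = [
    "example-reviews.com",
    "example-news.org",
    "example-brand.com",
    "example-forum.net",
]

for kind, share in sorted(source_mix(cited).items()):
    print(f"{kind}: {share:.0f}%")
```

Running the same tally separately for each engine would produce a comparison like the one in the list above.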

What this means for brands:

If your AI visibility strategy relies on user-generated content, Reddit threads, or social proof—the types of content that perform well in Google's "real experiences" updates—you may be systematically underrepresented in AI answers.

AI engines appear to prioritize authoritative, editorially produced content from established publications. Brands that invest in earned media placements, industry publication features, and expert reviews are more likely to be cited than those relying on community buzz.

Finding 3: AI Sources Are Significantly Fresher Than Google Results

Content freshness emerged as a critical differentiator. The researchers measured article age in days across verticals and found consistently younger sources in AI responses.

For consumer electronics queries:

- Claude cited sources with a median age of 62 days

- GPT-4 cited sources averaging 80 days old

- Perplexity averaged 90 days

- Google surfaced content with a median age of 130 days

For automotive queries, the gap was even more pronounced:

- AI models averaged 148-217 days

- Google averaged 493 days
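
Article age in days is straightforward to compute once each cited source has a publication date. The sketch below uses made-up dates and a hypothetical helper name; it reports both the median and the mean, since the two can differ noticeably when a few old pages sit among recent ones. How the researchers extracted publication dates is not covered here.

```python
from datetime import date
from statistics import mean, median


def ages_in_days(published_dates, as_of):
    """Age of each source in whole days, measured at the as_of date."""
    return [(as_of - d).days for d in published_dates]


# Hypothetical publication dates for sources cited in one response.
as_of = date(2025, 6, 30)
published = [date(2025, 6, 1), date(2025, 4, 1), date(2025, 1, 1)]

ages = ages_in_days(published, as_of)
print("Ages in days:", ages)
print("Median age:", median(ages))
print(f"Mean age: {mean(ages):.1f}")
```

For a content maintenance program, tracking the same number for your own key pages gives a rough sense of how they compare with the source ages reported above.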

What this means for brands:

AI engines favor recent, updated content. If your website contains evergreen content that hasn't been refreshed in months—or years—it may be deprioritized by generative AI systems, even if it still ranks well on Google.

This has significant implications for content strategy. The traditional SEO approach of "create and forget" doesn't work for AI visibility. Brands need active content maintenance programs that regularly update existing pages with fresh information, new data, and current perspectives.

Finding 4: Pre-Training Bias Shapes Which Brands Get Mentioned

Perhaps the most important finding for competitive positioning: LLM pre-training creates persistent biases that influence which brands are surfaced, regardless of current web content.

The researchers tested this by perturbing the evidence provided to the models. For well-known entities (like Toyota or Samsung), rankings remained stable even when the supporting evidence was shuffled, swapped, or restricted. The model relied on its "internal knowledge" from pre-training rather than the retrieved snippets.

For niche entities, the pattern reversed. Rankings became highly sensitive to the provided evidence because the model lacked strong priors.

Specific data from the study:

- For popular entities, 16% of ranked items appeared without any citation support—the model simply "knew" them

- Toyota and Honda had citation miss rates of only 3-6%

- Less established brands like Infiniti showed miss rates of 73%
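
One way to picture a citation miss rate: for each brand, count how often it appears in a ranking without being mentioned anywhere in the evidence the model cited. The sketch below assumes that definition, uses plain substring matching, and works on placeholder brand names; the study's actual procedure may differ on all three points.

```python
from collections import defaultdict


def miss_rates(responses):
    """Per-brand share of ranked appearances that lack citation support.

    Each response is a (ranking, snippets) pair. A brand counts as a miss
    when no cited snippet mentions it (assumed definition, substring match).
    """
    ranked = defaultdict(int)
    missed = defaultdict(int)
    for ranking, snippets in responses:
        evidence = " ".join(snippets).lower()
        for brand in ranking:
            ranked[brand] += 1
            if brand.lower() not in evidence:
                missed[brand] += 1
    return {brand: missed[brand] / ranked[brand] for brand in ranked}


# Hypothetical responses: each has a ranked list and the snippets it cited.
responses = [
    (["BrandA", "BrandB"], ["BrandA tops the reliability chart."]),
    (["BrandA", "BrandB"], ["BrandA and BrandB both score well."]),
]

for brand, rate in miss_rates(responses).items():
    print(f"{brand}: {rate:.0%} of ranked appearances had no citation support")
```

Here BrandA is always backed by a snippet, while BrandB is ranked once with no supporting evidence, giving it a 50% miss rate.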

What this means for brands:

If your brand is well-established and widely discussed across the web (especially in the data used to train these models), you have an inherent advantage. AI systems will surface you even without explicit citations.

If your brand is newer or operates in a niche category, you must work harder to be included in the real-time retrieved evidence. Your content must be discoverable, authoritative, and explicitly relevant to the query.

This creates a fascinating competitive dynamic: established brands have moats from pre-training, while challengers must win through superior content optimization.

Why Traditional SEO Alone Is No Longer Enough

The research makes one thing abundantly clear: the skills, tactics, and strategies that drive Google rankings do not automatically translate to AI visibility.

Traditional SEO focuses on:

- Keyword optimization and targeting

- Backlink acquisition for domain authority

- Technical site performance

- On-page optimization factors

- Ranking position in search results

AI visibility requires a fundamentally different approach:

- Being cited by authoritative third-party sources

- Maintaining fresh, regularly updated content

- Building presence across earned media channels

- Creating content that directly answers conversational queries

- Understanding how pre-training data shapes model behavior

The study describes this as two distinct "information ecosystems." Google acts as a gateway to documents you must then evaluate. AI engines synthesize information and deliver answers directly. The optimization strategies for each are necessarily different.

This is why the researchers explicitly contrast "Answer Engine Optimization" (AEO) and "Generative Engine Optimization" (GEO) with traditional SEO. Same goal—visibility—but different mechanics entirely.

How Llumos Helps Brands Win in AI Search

The research findings create an urgent mandate: brands need visibility into how they perform across AI search platforms, not just Google. This is precisely what Llumos is built to provide.

Monitor AI Search Visibility Across Platforms

Llumos tracks your brand mentions across ChatGPT, Claude, Gemini, and Perplexity in real time. Instead of manually testing queries across four different platforms, you get a unified dashboard showing exactly when and where your brand appears and, critically, when it doesn't.

The research found only 4-15% overlap between Google and AI sources. That means monitoring Google rankings tells you almost nothing about your AI visibility. Llumos bridges this gap.

Track Competitor Positioning

The study revealed that AI engines surface different competitive landscapes than Google. Your top Google competitors may not be your top AI competitors.

Llumos shows you which brands are being cited for your target queries across AI platforms. You can identify new competitive threats you didn't know existed and discover positioning gaps where you could win.

Identify Content Gaps That Prevent AI Citation

The research showed that AI engines favor earned media and fresh content. But which specific content gaps are costing you AI visibility?

Llumos analyzes the prompts and queries where your brand is absent and identifies what content, topics, or authority signals you're missing. Instead of guessing why ChatGPT doesn't mention you, you get actionable intelligence on what to create or optimize.

Optimize for AI Discovery, Not Just Rankings

Traditional SEO tools optimize for rankings. Llumos optimizes for citations and recommendations—the new currency of AI search.

The whitepaper found that for popular queries, LLM pre-training dominates. For niche queries, real-time retrieval matters more. Llumos helps you understand which category your brand falls into and what strategies will actually move the needle.

The research is clear: AI search operates on different rules. Llumos is built specifically for this new reality—a GEO-first platform designed to help brands get discovered, cited, and recommended by AI.

The Future of Search Is Generative

The whitepaper concludes with a stark observation: "As user engagement shifts toward AI-powered search, understanding ranking mechanisms becomes essential."

We're witnessing a fundamental restructuring of how information is discovered and consumed. Google isn't going away—but it's no longer the only game in town. ChatGPT processes over 1 billion queries per week. Perplexity is growing rapidly. Claude and Gemini are integrated into enterprise workflows.

For brands, the implications are clear:

- Measurement must evolve. Tracking Google rankings without tracking AI visibility is like measuring half your market.

- Content strategy must adapt. Fresh, authoritative, frequently updated content that earns third-party citations will outperform static SEO-optimized pages.

- Competitive intelligence must expand. Your AI competitors are not the same as your Google competitors.

- Early movers will have advantages. The study shows pre-training creates moats. Brands that build AI visibility now will have advantages baked into future model versions.

The brands that act now—while competitors still focus exclusively on traditional SEO—will establish the kind of AI visibility that becomes harder to disrupt over time.

Start Understanding Your AI Visibility Today

The research is peer-reviewed and public. The shift is documented and measurable. The only question is whether your brand will adapt before your competitors do.

Llumos provides the intelligence you need to navigate this shift:

- AI Visibility Scans across ChatGPT, Claude, Gemini, and Perplexity

- Competitive benchmarking showing who's winning AI citations in your category

- Content gap analysis identifying what's missing from your AI optimization strategy

- Trend tracking to measure your AI visibility improvements over time

Stop guessing whether AI mentions you. Start measuring it.

Get your AI Visibility Report →

The future of search is generative. The brands that get cited—and recommended—by AI will capture the customers who never click a link. The research proves the rules have changed. Llumos helps you win by the new ones.

Read the full whitepaper: Navigating the Shift: A Comparative Analysis of Web Search and Generative AI Response Generation